In some cases, the relationship between variables in our data may not be well described by a simple polynomial function. When this happens, we need to use more flexible methods that allow us to fit arbitrary functions to our data. One such method is the curve_fit function from the SciPy library, which enables us to fit user-defined functions to our data using non-linear least squares optimization.

Using curve_fit in SciPy:¶

The curve_fit function in SciPy is a powerful tool for fitting arbitrary functions to data using non-linear least squares optimization. It takes as input a user-defined model function, along with the observed data and optional initial parameter estimates, and returns the optimal parameter values that minimize the sum of squared residuals between the model predictions and the observed data.

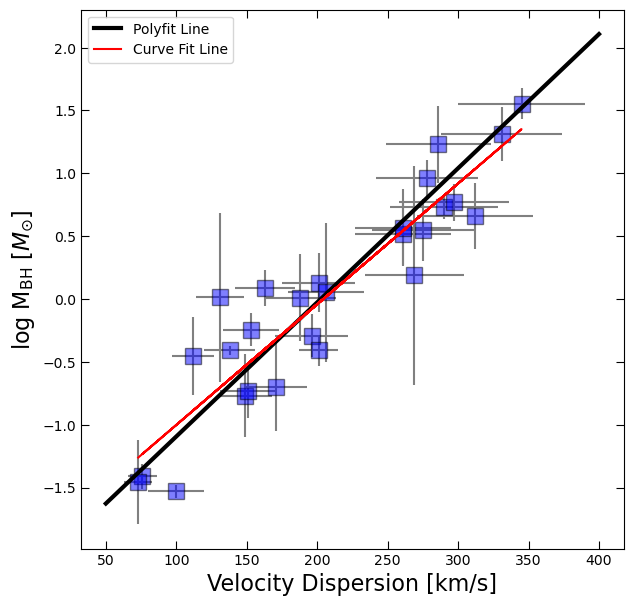

Example: Fitting a Line to the M-sigma Relation using curve_fit¶

# First, copy our code from last time to read in the m-sigma data and fit a line with polyfit

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

df = pd.read_csv('m-sigma.txt')

log_BH = np.log10(df.M_bh)

BH_err = df.M_bh_err

coefficients = np.polyfit(df.sigma,log_BH,deg=1,w=1/BH_err)

x_fit = np.linspace(50,400,100)

y_fit = np.polyval(coefficients, x_fit)from scipy.optimize import curve_fit

# Define a linear model function (y = mx + b)

def linear_model(x, m, b):

return m * x + b

# Perform curve fitting to estimate parameters (slope and intercept)

popt, pcov = curve_fit(linear_model, df.sigma, log_BH)

# Extract estimated parameters

slope, intercept = poptWe usually call the outputs of curve_fit popt and pcov. These represent:

popt: This variable holds the best estimates of the parameters (e.g., slope and intercept) for the fitted model. It’s what we use to get the actual values of the parameters we’re interested in. The ordering ofpoptmatches the ordering of the paramters in ourlinear_modeluser defined function after the first arguement (which is the data x).pcov: This variable represents the covariance matrix, which tells us about the uncertainty or correlation between the estimated parameters. It helps us understand how reliable our parameter estimates are. Specifically, it provides information about how much the estimated parameters vary together. If two parameters are highly correlated, changes in one may influence the estimate of the other. For now, we can ignore this.

fig, ax = plt.subplots(figsize=(7,7))

ax.errorbar(df.sigma, log_BH, yerr=BH_err, xerr=df.sigma_err, fmt='None', color='gray')

ax.plot(df.sigma, log_BH, 's', color='blue', ms=12, alpha=0.5, mec='k')

ax.plot(x_fit, y_fit, 'k', lw=3, label = 'Polyfit Line')

ax.plot(df.sigma, linear_model(df.sigma, slope, intercept), color='red', label='Curve Fit Line')

ax.set_xlabel('Velocity Dispersion [km/s]', fontsize=16)

ax.tick_params(which='both', top=True, right=True, direction='in', length=6)

ax.set_ylabel(r'log M$_{\rm BH}$ [$M_{\odot}$]', fontsize=16)

ax.legend();

Note: these fits may be slightly different because we are not incorporating errors in the fit using curve_fit.

A Common Use-Case: Gaussian Fitting¶

Gaussian fitting, also known as Gaussian curve fitting or Gaussian distribution fitting, is a statistical method used to model data that follows a Gaussian (normal) distribution. A Gaussian distribution is characterized by its bell-shaped curve, which is symmetrical around the mean and describes the probability distribution of a continuous random variable. Gaussian fitting involves estimating the parameters of a Gaussian function, such as the mean, standard deviation, and amplitude, that best describe the observed data.

Gaussian fitting is important in many aspects of astronomy, as we expect many distributions to be well-described by Gaussians. A Gaussian can be expressed as:

- Mean (μ): The center or average value of the Gaussian distribution.

- Standard Deviation (σ): The measure of the spread or dispersion of the Gaussian distribution.

- Amplitude (A): The peak height or maximum value of the Gaussian curve.

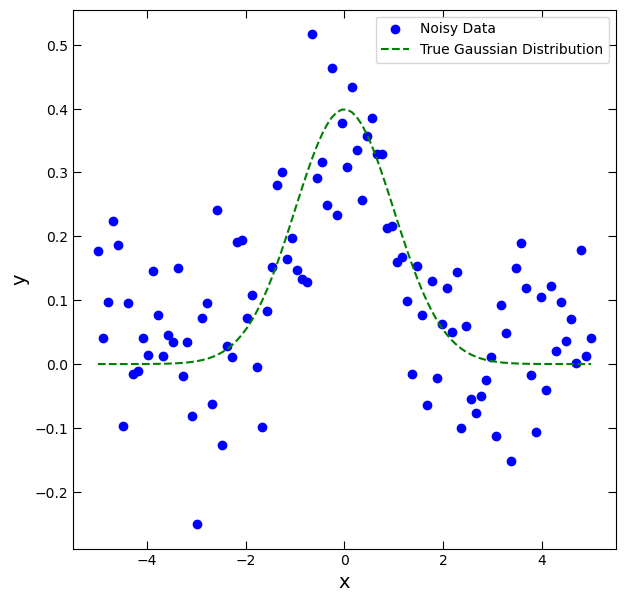

import numpy as np

import matplotlib.pyplot as plt

from scipy.optimize import curve_fit

# Generate synthetic data following a Gaussian distribution

x = np.linspace(-5, 5, 100)

y_true = np.exp(-0.5 * (x ** 2)) / np.sqrt(2 * np.pi) # True Gaussian distribution

# Add noise to the data to simulate experimental measurements

np.random.seed(0)

y_noise = y_true + np.random.normal(loc=0, scale=0.1, size=len(x))fig, ax = plt.subplots(figsize=(7,7))

ax.scatter(x, y_noise, color='blue', label='Noisy Data')

ax.plot(x, y_true, linestyle='--', color='green', label='True Gaussian Distribution')

ax.set_xlabel('x', fontsize=14)

ax.set_ylabel('y', fontsize=14)

ax.tick_params(which='both', top=True, right=True, direction='in', length=6)

ax.legend();